Testing Methods

User can access testing from:

- Agent edit screen (top right)

- Test Web Call button (recommended)

- Test Call button (phone call)

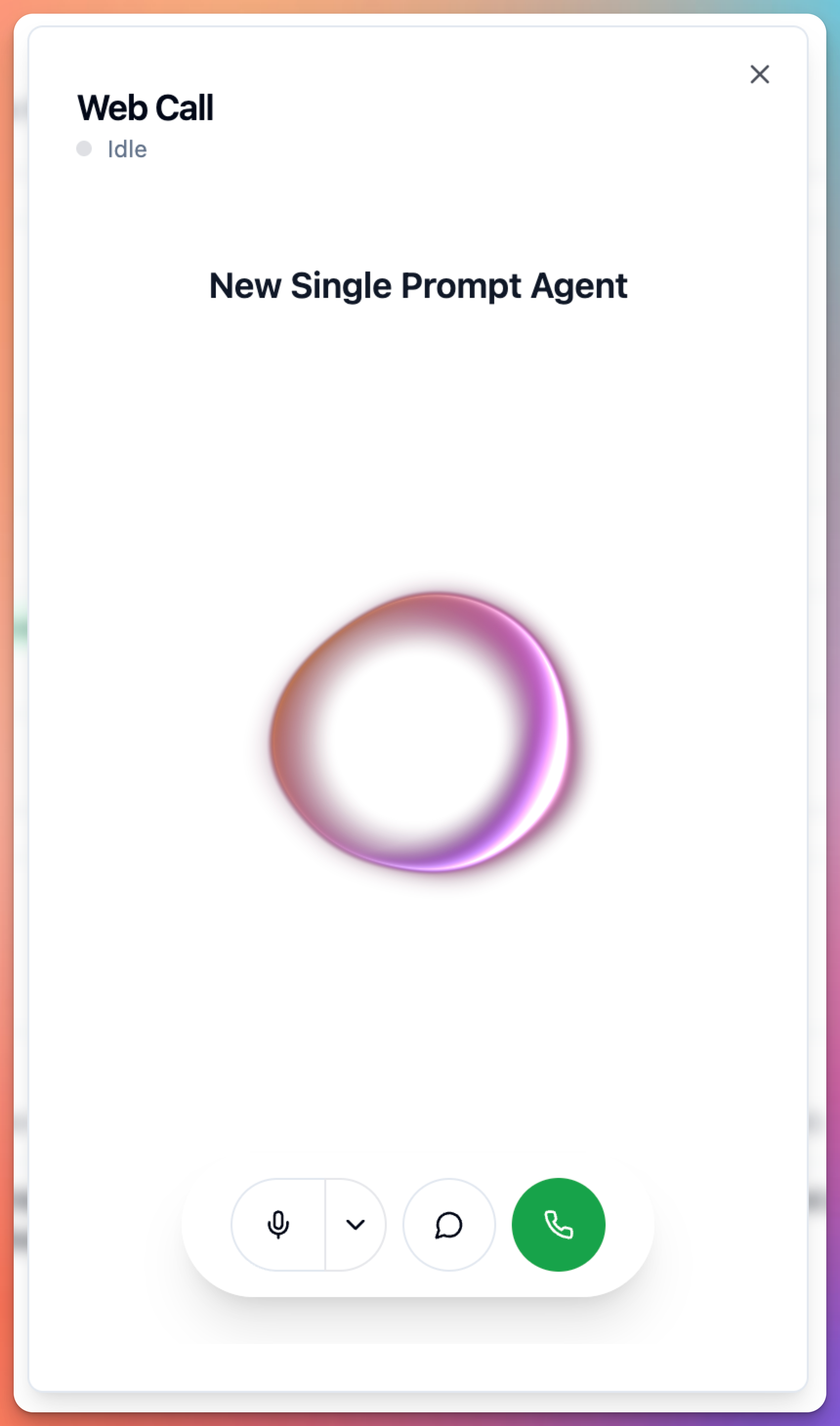

Method 1: Web Call Testing (Recommended)

How to Test via Web Call

User can start web call test:

- Click “Test Web Call” button (top right of agent screen)

- Web call modal opens

- Click “Start Call” button

- Allow microphone access in browser

- Speak with agent

- Test conversation flows

- Click “End Call” when done

Web Call Benefits

User gets:

- Instant testing (no dialing required)

- Browser-based (works on any device)

- Free testing (no phone costs)

- Quick iterations (test → edit → test)

- Same experience as phone call

- Full feature testing (tools, transfers, etc.)

Web Call Best Practices

User should:

- Test in quiet environment first

- Use good quality microphone

- Speak clearly and naturally

- Test different scenarios

- Test edge cases and errors

- Test all conversation paths

- Test background noise handling

- Test interruptions

- Test unclear speech

- Test silence handling

- Test multiple languages (if multilingual)

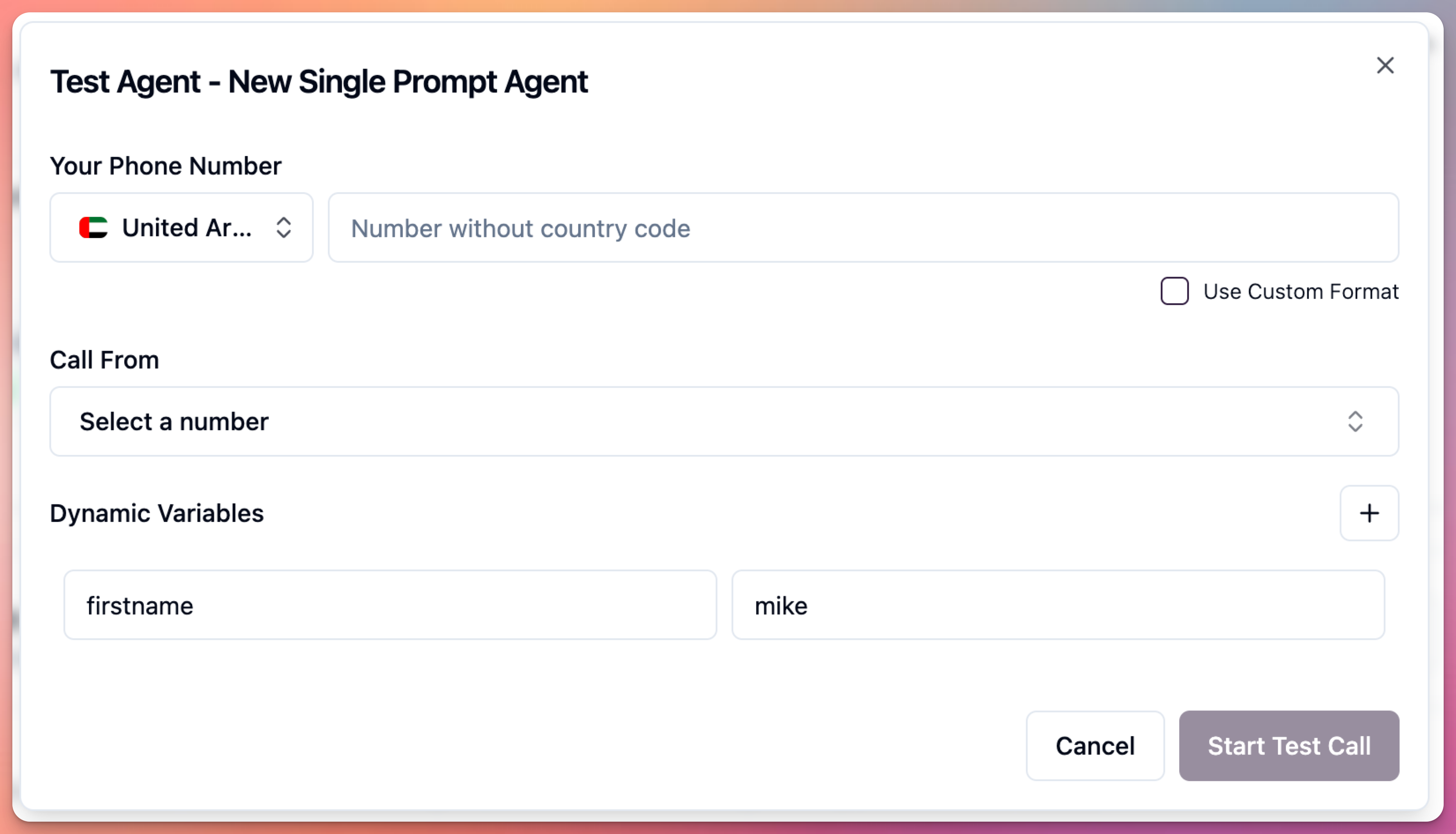

Method 2: Phone Call Testing

User can also test with an actual phone call.

How to Test via Phone Call

User can test over phone:

- Click “Test Call” button

- Modal shows phone number to call

- Optionally, enter your phone number to have the agent call you

- Otherwise, call the displayed number

- Test agent conversation

- Hang up when done

Phone Call Benefits

User gets:

- Real phone network testing

- Actual call quality testing

- Network latency testing

- Caller ID testing

- Phone-specific features testing

Phone Call Use Cases

User should test via phone when:

- Testing phone number integrations

- Testing call quality on real network

- Testing with team members remotely

- Demonstrating to stakeholders

- Testing caller ID display

- Testing international calls

What to Test

Core Functionality

User must test:

Identity & Personality:

- Agent introduces itself correctly

- Tone matches configuration

- Personality is consistent

- Brand voice is maintained

Tasks:

- Agent completes primary tasks

- Information collection works

- Required data is gathered

- Workflows follow correct steps

Guardrails:

- Agent refuses prohibited topics

- Escalation triggers work

- Boundaries are respected

- Compliance rules followed

Tool Testing

User must test each enabled tool:

End Call:

- Triggers on “goodbye”

- Asks if anything else needed

- Ends call properly

- Transfers when requested

- Explains transfer reason

- Connects to correct destination

- Collects email address

- Confirms before sending

- Email actually sent

- Checks availability

- Presents options

- Confirms booking

- Sends confirmation

- Data sent correctly

- Handles responses

- Errors handled gracefully

- Retrieves correct information

- Presents naturally

- Handles missing info
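
One way to make sure every enabled tool gets exercised is to compare the tools enabled on the agent against the tools that actually fired during test calls. The snippet below is a minimal sketch of that comparison. The tool names are hypothetical placeholders, and the list of exercised tools is assumed to be copied by hand from the call transcript or tool execution logs; no platform API is used.

```python
def untested_tools(enabled_tools, exercised_tools):
    """Return enabled tools that no test call has triggered yet, in original order."""
    seen = set(exercised_tools)
    return [tool for tool in enabled_tools if tool not in seen]


# Hypothetical tool names, for illustration only.
enabled = ["end_call", "transfer_call", "send_email"]

# Tools observed firing during test calls (noted manually from the logs).
exercised = ["end_call", "end_call", "send_email"]

missing = untested_tools(enabled, exercised)
print(missing)
```

Any tool that appears in the result still needs at least one test call that triggers it.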

Conversation Paths

User should test:

Happy Path:

- User knows what they want

- All information provided clearly

- Task completes successfully

- User mumbles or speaks unclearly

- Background noise present

- Multiple intents in one sentence

- User provides wrong information

- User changes mind mid-conversation

- User asks off-topic questions

- User remains silent

- User interrupts frequently

- Tools fail or timeout

- Missing required information

- System errors occur

- User gets frustrated
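
The conversation paths above can be tracked as a simple scenario list while testing by hand. This is only a sketch of one possible record-keeping approach: the `Scenario` class and `summarize` function are illustrative names, not part of the product, and results are entered manually after each test call.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Scenario:
    name: str
    passed: Optional[bool] = None  # None means the scenario has not been run yet
    notes: str = ""


def summarize(scenarios):
    """Count scenarios that passed, failed, or have not been run."""
    summary = {"passed": 0, "failed": 0, "not_run": 0}
    for scenario in scenarios:
        if scenario.passed is None:
            summary["not_run"] += 1
        elif scenario.passed:
            summary["passed"] += 1
        else:
            summary["failed"] += 1
    return summary


scenarios = [
    Scenario("User knows what they want"),
    Scenario("User mumbles or speaks unclearly"),
    Scenario("User changes mind mid-conversation"),
    Scenario("Tools fail or timeout"),
]

# Record outcomes by hand after each test call.
scenarios[0].passed = True
scenarios[3].passed = False
scenarios[3].notes = "Agent went silent after the tool timed out"

print(summarize(scenarios))
```

Keeping a note next to each failed scenario makes it easier to re-test the same path after editing the agent.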

Voice & Language

User must test:

Voice Quality:

- Pronunciation is clear

- Pace is appropriate

- Tone sounds natural

- No robotic artifacts

Language:

- Correct language detected

- Accents understood

- Regional variations handled

- Multilingual if configured

Testing Checklist

User can use this checklist:

Pre-Test:

- Agent configuration saved

- All tools configured

- Identity, Tasks, Guardrails set

- Voice and language selected

During Test:

- Agent greets appropriately

- Understands user intent

- Follows conversation flow

- Uses tools correctly

- Handles errors gracefully

- Maintains personality

- Respects guardrails

Post-Test:

- Review call transcript

- Check tool execution logs

- Verify data collected

- Note improvements needed

- Test again after changes
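
For teams that prefer to keep the checklist in a script or notebook, it can be held as a mapping from item to done/not done. The following is a rough sketch under that assumption; `remaining_items` is a hypothetical helper, and only a few checklist items are shown.

```python
def remaining_items(checklist):
    """Return the checklist items not yet marked done, preserving order."""
    return [item for item, done in checklist.items() if not done]


# A subset of the checklist above; True means the item has been verified.
checklist = {
    "Agent configuration saved": True,
    "All tools configured": True,
    "Agent greets appropriately": True,
    "Handles errors gracefully": False,
    "Review call transcript": False,
}

todo = remaining_items(checklist)
print(todo)
```

An empty result means every listed item has been checked off.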

Common Testing Mistakes

After Testing

User can:

- Review test results

- Identify improvement areas

- Update agent configuration

- Re-test changes

- Run simulation tests for comprehensive testing

- Deploy to production when satisfied

For comprehensive testing with multiple scenarios, use Simulation Tests to run automated test suites.

Testing Workflow

Initial Testing:

- Configure agent

- Run web call test

- Test basic conversation

- Fix obvious issues

- Test again

- Test all features

- Test all tools

- Test edge cases

- Get feedback from team

- Run simulation tests

- Final web call test

- Phone call test

- Multi-user testing

- Stakeholder demo

- Production deployment

Next Steps

User can:

- Run Simulation Tests for automated testing

- Configure Phone Numbers for production

- Set up Webhooks for integrations

- Review Call Analytics after deployment